LangChain's origin story

What is LangChain?

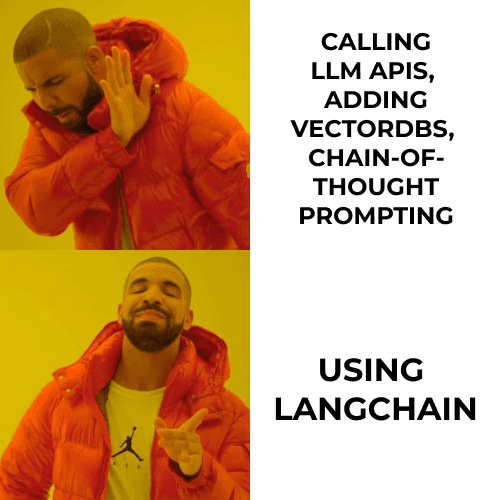

LangChain was built as the easiest way for a developer to go from idea to demo for their AI application. Today's foundation models are incredibly powerful, but by themselves they aren't a product. Depending on the use case, they need context, specific prompting, and fine-tuning to effectively execute the tasks they're given. That's where LangChain comes in.

Building an AI application in 2023

Let’s start by talking about what it takes to build an AI product today.

In the past, companies that wanted to build AI products had to hire ML engineers, collect data, and train proprietary models.

Now, with foundational LLMs from companies like OpenAI and Google, companies can skip that with just an API call. Instead, the steps to develop an AI feature have become:

- choose a model,

- engineer the prompts, and

- add context or fine-tune.

Not only is it now faster to build an AI feature, it's also 10x cheaper. However, LLMs come with their own complexities and a new set of problems. These include:

- Which model to use: GPT-4 is the most capable, but it’s also the most expensive.

- Integrating the right context: A major problem with LLMs is hallucinations. When the model has insufficient background knowledge, it makes things up.

- Finding the right prompts: OpenAI’s GPT models were trained in a different manner than Facebook’s Llama. This difference shows up when you give the same prompt to both models and see drastically different outputs.

- Model continuity: Some models are eventually deprecated or discontinued, which can mean significant losses for applications built atop them. For example, going from GPT-3 to GPT-4 is an upgrade, but if a team has built infrastructure specifically around GPT-3, much of that work may need to be scrapped because of the differences between the models.

LangChain the product

LangChain holds the promise to solve many of these problems. Harrison Chase, CEO, describes the company as building the open-source framework for building context-aware reasoning applications.

Let’s break that down.

- Open-Source: Anyone can copy, modify, and contribute to the codebase. In the world of AI, where changes are measured in days or even hours rather than years, being able to leverage the power of the crowd as a non-foundational model company is a fundamental competitive advantage.

- Context-aware: Providing a source of context for the language model. For example, if I run a restaurant and use an LLM, a customer might ask whether we serve a specific dish. Without context, the LLM would hallucinate an answer based on the data it was trained on.

- Reasoning: Breaking a larger problem down into smaller subcomponents. If a customer asks whether the restaurant serves gluten-free options, that decomposes into a chain of smaller questions, like "What are the ingredients in our dishes?", "What foods have gluten in them?", and "Is there any overlap between foods with gluten and dish ingredients?"
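To make the decomposition concrete, here is a minimal Python sketch, assuming a hypothetical restaurant menu and a hypothetical list of gluten-containing ingredients. In a real LangChain application each sub-question would more likely be answered by an LLM call or a retrieval step; here plain functions stand in for them so the chain of reasoning is easy to follow.

```python
# Hypothetical menu standing in for the restaurant's own context.
MENU = {
    "margherita pizza": ["wheat flour", "tomato", "mozzarella", "basil"],
    "caesar salad": ["romaine", "parmesan", "croutons", "anchovy"],
    "grilled salmon": ["salmon", "lemon", "olive oil", "rice"],
}

# Hypothetical list of gluten-containing ingredients.
GLUTEN_SOURCES = {"wheat flour", "croutons", "barley", "rye"}


def dish_ingredients(menu):
    """Sub-question 1: what are the ingredients in our dishes?"""
    return {dish: set(items) for dish, items in menu.items()}


def gluten_foods():
    """Sub-question 2: what foods have gluten in them?"""
    return GLUTEN_SOURCES


def gluten_free_dishes(menu):
    """Sub-question 3: which dishes have no overlap with gluten sources?"""
    ingredients = dish_ingredients(menu)
    gluten = gluten_foods()
    return sorted(dish for dish, items in ingredients.items() if not items & gluten)


print(gluten_free_dishes(MENU))  # ['grilled salmon']
```

Each function answers exactly one of the smaller questions, and the final answer is composed from their results rather than produced in a single step.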

To date, LangChain offers three main products for developers looking to jump into building AI applications.

Orchestration

Today, most LLMs in isolation aren’t enough to be standalone products. They can solve basic queries but fall short when asked to do things like running a customer service agent for a restaurant. They need specific techniques like chain-of-thought prompting, fine-tuning, and Retrieval Augmented Generation (RAG).

LangChain's orchestration product solves this with an easy way to chain together the base foundation model, prompt sequences, document loaders, text splitters (which chunk text to fit token limits), and vector databases. By assembling all of these pieces, developers can quickly build an application that has real utility.
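A stripped-down version of that pipeline (split, retrieve, assemble the prompt) can be sketched in plain Python. This is not LangChain's API: `split_text`, `retrieve`, and `build_prompt` are hypothetical helpers, character counts stand in for tokens, and word overlap stands in for a vector database's embedding similarity.

```python
import re


def split_text(text, chunk_size=200, overlap=50):
    """Split text into overlapping character windows (a stand-in for a token-aware splitter)."""
    if overlap >= chunk_size:
        raise ValueError("overlap must be smaller than chunk_size")
    if not text:
        return []
    step = chunk_size - overlap
    # Stop once the remaining text is already covered by the previous window's overlap.
    return [text[i:i + chunk_size] for i in range(0, max(len(text) - overlap, 1), step)]


def tokenize(text):
    """Lowercase word set used for crude similarity."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(chunks, question, k=2):
    """Rank chunks by word overlap with the question (a toy stand-in for a vector DB)."""
    q = tokenize(question)
    return sorted(chunks, key=lambda c: len(q & tokenize(c)), reverse=True)[:k]


def build_prompt(question, context_chunks):
    """Assemble the retrieved context and the question into one prompt string."""
    context = "\n---\n".join(context_chunks)
    return (
        "Answer using only the context below. If the answer is not there, say you don't know.\n\n"
        f"Context:\n{context}\n\nQuestion: {question}"
    )


# Hypothetical source documents for a restaurant.
docs = [
    "We serve grilled salmon on Fridays.",
    "Parking is available behind the building.",
    "Desserts include tiramisu and gelato.",
]
chunks = [piece for doc in docs for piece in split_text(doc, chunk_size=200, overlap=50)]
print(build_prompt("Do you serve salmon?", retrieve(chunks, "Do you serve salmon?", k=1)))
```

The overlap between chunks is a common design choice: it reduces the chance that a fact is cut in half at a chunk boundary and lost to retrieval.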

Further, by building the orchestration layer, LangChain plays well with almost all the players within the AI ecosystem. Whether a developer works with OpenAI or Anthropic, Supabase or Pinecone, Elasticsearch or Faiss, they can find all of these integrations on LangChain.

Standardization

“When I build stuff with GPT-3, especially in the earlier days, I get the strong impression that it's like we are doing machine learning without Numpy and Pandas. With LangChain, many of the systems I have built can be done in just one or two lines, making life much easier for rest of us.”

The quote above, the first comment under LangChain's $10 million seed round announcement on Hacker News, points to LangChain's less visible product: its standardization engine.

Today, if a developer wants to switch from OpenAI’s GPT models to Anthropic’s Claude models—say, due to uncertainty around a company’s internal governance—they’d need to rewrite significant parts of their application and relearn how to prompt the model. Specifically, Anthropic’s documentation includes “Configuring GPT Prompts for Claude” with tips such as, “Claude has been fine-tuned to recognize XML tags within prompts… This allows Claude to compartmentalize a prompt into distinct parts.”
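The kind of model-specific formatting Anthropic describes can be illustrated with a small sketch: the same prompt sections rendered once under plain headings and once wrapped in XML tags. The function and section names are hypothetical; the point is only that the same content may need a different layout for each model family.

```python
def render_heading_style(sections):
    """Lay out each prompt section under a plain heading."""
    return "\n\n".join(f"### {name.title()}\n{body}" for name, body in sections.items())


def render_xml_style(sections):
    """Wrap each prompt section in its own XML tag, the layout Anthropic's docs suggest for Claude."""
    return "\n\n".join(f"<{name}>\n{body}\n</{name}>" for name, body in sections.items())


# Hypothetical prompt sections for a restaurant assistant.
sections = {
    "instructions": "Answer the customer's question using only the menu.",
    "menu": "Margherita pizza, grilled salmon",
}
print(render_heading_style(sections))
print()
print(render_xml_style(sections))
```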

For developers looking to experiment with dozens of different LLMs to find the right one for their application, it's simply not feasible to figure out the right prompts for each model.

This is especially difficult now that AI companies are building out model-specific features like function calling and JSON mode.

That's where LangChain comes in. Developers using LangChain can swap out components of their LLM infrastructure by changing just a few lines of code. Since LangChain dynamically handles things like function calling and output validation, developers don't need to use LLM-specific features directly.
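One way to picture this kind of swap is a shared interface that every model wrapper implements, plus a single place that validates output. The sketch below assumes two hypothetical fake models (`FakeGPT`, `FakeClaude`) that return canned replies instead of calling any real API; it illustrates the pattern, not LangChain's actual classes.

```python
import json
from typing import Protocol


class ChatModel(Protocol):
    """Minimal interface every model wrapper implements."""

    def complete(self, prompt: str) -> str: ...


class FakeGPT:
    """Hypothetical stand-in for an OpenAI-backed model; returns bare JSON."""

    def complete(self, prompt: str) -> str:
        return '{"answer": "yes", "confidence": 0.9}'


class FakeClaude:
    """Hypothetical stand-in for an Anthropic-backed model; wraps JSON in prose."""

    def complete(self, prompt: str) -> str:
        return 'Here is the result: {"answer": "yes", "confidence": 0.8}'


def parse_answer(raw: str) -> dict:
    """Extract the outermost JSON object from a reply and check the expected keys."""
    start, end = raw.find("{"), raw.rfind("}")
    if start == -1 or end < start:
        raise ValueError(f"no JSON object in reply: {raw!r}")
    data = json.loads(raw[start:end + 1])
    if not {"answer", "confidence"} <= set(data):
        raise ValueError(f"missing keys in reply: {data}")
    return data


def ask(model: ChatModel, question: str) -> dict:
    """Application code depends only on the interface, never on a specific provider."""
    prompt = f'Reply with JSON {{"answer": ..., "confidence": ...}}.\nQuestion: {question}'
    return parse_answer(model.complete(prompt))


for model in (FakeGPT(), FakeClaude()):
    print(type(model).__name__, ask(model, "Do you serve gluten-free pasta?"))
```

Switching providers here means changing which object is passed to `ask`; the prompt and validation logic stay untouched.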

// From LangChain itself

On top of that, LangChain hosts an open-source collection of prompts, agents, and chains for developers to quickly get up to speed on any model they're looking to use. One of the top resources is a prompt that adds sequential function calling to models other than OpenAI's 0613 GPT models, the first versions released with native function calling.
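Sequential function calling means the model requests one function at a time, sees each result, and decides on the next call until it can answer. A minimal sketch of that loop, assuming a hypothetical `scripted_model` that stands in for an LLM and two hypothetical restaurant tools; it is not the LangChain Hub prompt mentioned above.

```python
# Hypothetical restaurant data and the tools the model is allowed to call.
INGREDIENTS = {
    "margherita pizza": ["wheat flour", "tomato", "mozzarella"],
    "grilled salmon": ["salmon", "lemon", "rice"],
}
TOOLS = {
    "get_menu": lambda: list(INGREDIENTS),
    "get_ingredients": lambda dish: INGREDIENTS[dish],
}


def scripted_model(history):
    """Hypothetical stand-in for an LLM: picks the next call based on results seen so far."""
    results = [m["content"] for m in history if m["role"] == "tool"]
    if not results:
        return {"call": "get_menu", "args": {}}
    menu, ingredient_lists = results[0], results[1:]
    if len(ingredient_lists) < len(menu):
        # Look up the next dish's ingredients, one call at a time.
        return {"call": "get_ingredients", "args": {"dish": menu[len(ingredient_lists)]}}
    # Simplistic, hypothetical gluten check: only wheat flour counts.
    safe = [d for d, items in zip(menu, ingredient_lists) if "wheat flour" not in items]
    return {"answer": safe}


def run(question, model=scripted_model, max_steps=10):
    """Alternate between model decisions and tool calls until the model answers."""
    history = [{"role": "user", "content": question}]
    for _ in range(max_steps):
        step = model(history)
        if "answer" in step:
            return step["answer"], history
        result = TOOLS[step["call"]](**step["args"])
        history.append({"role": "tool", "name": step["call"], "content": result})
    raise RuntimeError("model did not answer within max_steps")


answer, history = run("Which dishes are gluten-free?")
print(answer)
```

The `max_steps` cap is a deliberate guard: a real model can loop on tool calls, so the orchestrator, not the model, decides when to stop.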

LangSmith

The final leg of LangChain’s product is LangSmith, a unified platform for debugging, testing, evaluating, and monitoring LLM applications. It’s a mouthful, but a step-change for LangChain’s capabilities.

One of the biggest issues with LLMs is that they're a black box. Developers give them an input and then pray that the output is correct. Most of the time, the output is great, and it all feels like magic. But every so often, the model will output something that breaks the illusion. For most AI applications, getting things right 80% or even 90% of the time isn't enough.

LangSmith's goal is to bridge the gap between a demo that works in most cases and a production application that works in all cases.

To do this, the LangSmith product allows developers to see the inputs and outputs at each step of a model run and the exact sequence of runs (debug), write tests to check if the response was intended behavior (test), collect input/output examples to further refine the model (evaluate), and log latency, token usage, and feedback (monitor).
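The debug and monitor pieces boil down to recording every step of a run. A minimal sketch, assuming an in-memory list as the log and a hypothetical two-step pipeline; this is not LangSmith's SDK, and a real tracing tool would ship these records to a backend and capture more (such as token usage).

```python
import functools
import time

TRACE = []  # In-memory run log for this sketch.


def traced(step_name):
    """Record inputs, output, and latency for each call of the wrapped step."""
    def decorator(fn):
        @functools.wraps(fn)
        def wrapper(*args, **kwargs):
            start = time.perf_counter()
            output = fn(*args, **kwargs)
            TRACE.append({
                "step": step_name,
                "inputs": {"args": args, "kwargs": kwargs},
                "output": output,
                "latency_s": time.perf_counter() - start,
            })
            return output
        return wrapper
    return decorator


@traced("retrieve")
def retrieve(question):
    # Hypothetical retriever that always returns one chunk.
    return ["We serve grilled salmon on Fridays."]


@traced("generate")
def generate(question, context):
    # Hypothetical stand-in for an LLM call.
    return "Yes, on Fridays." if any("salmon" in c.lower() for c in context) else "I don't know."


def pipeline(question):
    return generate(question, retrieve(question))


answer = pipeline("Do you serve salmon?")
for record in TRACE:
    print(record["step"], record["output"], f'{record["latency_s"]:.6f}s')

# A minimal "test": check that the traced run followed the intended sequence.
assert [r["step"] for r in TRACE] == ["retrieve", "generate"]
```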

At a higher level, LangSmith lowers the barrier for developers to build great AI products. Instead of running extensive manual tests, they can rely on LangSmith to help optimize their application.

Bringing it all together

With these three products, LangChain solves many of the new problems that have emerged with the prevalence of LLMs. In the process, it has gone from a simple orchestration layer for LLMs to becoming the infrastructure for developers looking to build LLM applications. And, as it introduces products like LangServe, it's once again solving new LLM problems that appear as the technology matures.

The Founding Story

Now that we’ve talked about LangChain and its product, let’s go back to the start, when Harrison Chase was still an engineer at Robust Intelligence and ChatGPT hadn’t taken over the world yet.

In early 2022, while attending a company hackathon, Harrison built a chatbot that could query internal data from Notion and Slack. That work would eventually lead to Notion QA, an open-source project where users could ask questions to internal Notion databases in natural language.

Then, as the year wore on, he attended meetups in SF where the beginnings of an AI ecosystem were forming. Stable Diffusion had ignited interest in image generation, and GPT-3 was starting to show promise for real-world applications.

At these meetups, Harrison consistently saw developers building the same duplicative abstractions on top of LLMs to make them useful. That pain point became the idea for LangChain, an open-source project to simplify these abstractions.

The project started on October 16th with a fairly simple PR - “add initial prompt stuff”. It was just two Python files to ingest prompts for LLMs and a couple of JSON test files. He kept working on it and launched the project on October 25th after adding “more documentation”.

To kick things off, he published a Tweet thread about the project. It did well, bringing the project about 100 GitHub stars over the following days, which was enough for Harrison to leave his job and go all in on LangChain in November.


The timing was perfect. On November 30th, ChatGPT came out and brought the first spike of developers to LangChain. In December, the month after ChatGPT's launch, LangChain's star count grew roughly 2.4x, from 584 to 1,413.

And so in January, Harrison made things official by recruiting Ankush Gola, a former coworker at Robust Intelligence, and incorporating the company.

Once again, it was the right product at the right time in the right environment. In mid-January, as ChatGPT's effects were being felt around the world, Dr Alan D. Thompson released a video demonstrating how ChatGPT could be combined with Wolfram Alpha. More importantly, the video referenced LangChain in its title and description. Immediately afterward, LangChain's popularity skyrocketed, and the project added 839 stars in a single day.

That was enough for LangChain to reach breakout velocity. The repository added 80-200 stars per day, or roughly 10x the growth from before the video.

Finding Product-Market Fit (PMF)

How does an AI company know if it has PMF? In a space that sees new updates on almost a daily basis, this kind of thing can be hard to define. Companies like Jasper saw a large spike in their users as awareness of AI tools grew, but also had a lot of churn. Broadly, much of the spend on AI tools today is speculative, with companies allocating budget for experimentation rather than committing to long-term investments.


Further, the underlying foundation models are still developing rapidly. GPT-4 is the most capable model on the market, significantly outperforming ChatGPT's GPT-3.5 despite being released less than four months later. With each new model, application-layer companies need to re-innovate their product to maintain their competitive edge.

And so, for AI companies, perhaps the best metric to understand PMF is community and product stickiness, two things that LangChain is really good at.

For applied AI companies, the only reliable advantage that guarantees success from model to model is distribution. Given how nascent the space is, community is the best proxy for the distribution power of the company.

LangChain's community is one of the strongest in the AI space. Because LangChain's initial break came from an educational YouTube video, the company today invests heavily in educational content. Its CEO, Harrison, has appeared in multiple hour-long videos produced with other AI companies such as Pinecone and Weaviate. More recently, he worked with Andrew Ng on a course, Functions, Tools, and Agents with LangChain.

LangChain has also maintained its presence on YouTube, with community members publishing multiple crash-course tutorials and more in-depth guides. There's even an entire page in its documentation dedicated to these videos. Beyond YouTube, LangChain's Discord has 35,000 members, while its subreddit, r/langchain, has 10,000 members.

For developers who are more text-based learners, LangChain partnered with Pinecone to offer a handbook with chapters on everything from prompt templates to AI agents.

As a result of all of these efforts, the first step for any developer looking to build an AI application is to give LangChain a spin. After bumping into limitations, they might look for alternatives, but LangChain’s position is one where the users are theirs to lose rather than having to fight for the incremental developer.

It worked. OpenAI opened API access to ChatGPT on March 1st. Then, GPT-4 was released on March 14th. Growth surged: by the end of March, the repository had more than doubled its stars over the month, from 8k to 18k. April saw the same momentum, ending with 32k stars.

In April, LangChain announced a $10 million seed round led by Benchmark, followed by an unconfirmed $20 million round led by Sequoia one week later.

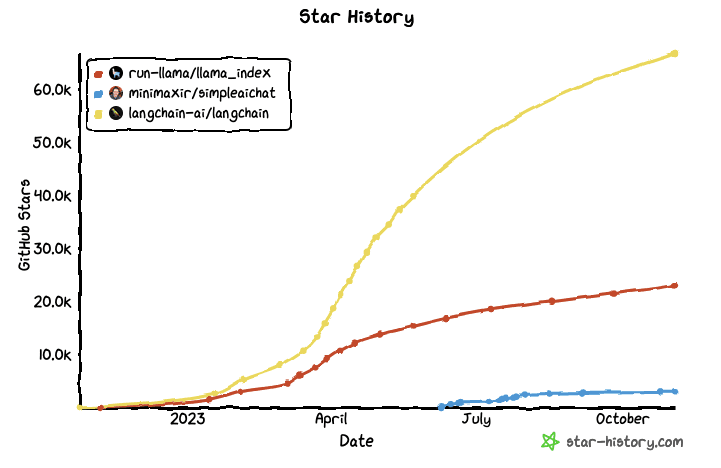

The second metric to look at is product stickiness. In other words: are alternatives to LangChain gaining traction? The answer to that question is no. While there are definite criticisms of LangChain, developers have continued to rely on LangChain for their LLM infrastructure. Competitors such as llama_index simply haven’t seen the same level of growth as LangChain, even accounting for time-lag. Even I built my own library of utils for building agents on GPT, gpt-agent-utils. It currently has 4 stars on GitHub 😅.

Where we go from here

The amount of progress that LangChain has made in just the span of one year is inspiring. Having built a product, a community, and a brand with developers shows not only the promise of the LangChain team but also the potential of the AI space. At the same time, the youthfulness of the company has been a double-edged sword. A couple of popular criticisms of LangChain include:

- Limitations with LangChain: Although products like LangSmith significantly raise the ceiling for developers using LangChain, large enterprise teams that are using open-source models often choose to build their own LLM infrastructure rather than use LangChain. That's because while LangChain makes it effortless to get started, it still comes with its own set of abstractions and dependencies that can hamper a product's long-term development.

- Documentation and Structure: Because of how quickly the space moves and its open-source nature, documentation is a major challenge with LangChain. Various developers have vocally commented on the problem.

But beyond these shortcomings lies a bigger question: how will AI itself develop? At the moment, foundation models are still improving, and there's still wide-open space for developers to apply existing models to specific niches.

As innovation in the space continues, each distinct layer of AI companies will focus on its specific use case. Foundation model companies like OpenAI improve their models. Vector DB companies compete on performance and other metrics. In this world, LangChain's position continues to strengthen: for other companies, the return on investing heavily in integrations with non-LangChain orchestrators is smaller than the return on optimizing their own products.

All in all, LangChain has won the hearts and minds of most AI developers. And with new products like LangSmith that inch developers closer to deploying production AI applications with LangChain, the future may well be one where LangChain sits at the center of AI development.
